Limit Reached?

Did your chat take the afternoon off?

- Keep going or even change your model

- Spend more slowly with lower-cost options

- Track your spending with ease

- You're never stuck waiting

Features

Vision

LLMs are powerful but hard to use. Every tool handles prompts, yet integrating them into real work is typically difficult.

We're building the bridge between people and AI with high-powered tools that are simple to use.

The next wave of productivity tools will be LLM-native, with memory, traceability, cost management, and seamless teamwork built in.

The Limits of AI

AI constantly forgets — even with giant context windows. Why should powerful intelligence require so much spoon-feeding?

Attention Limits

LLMs now accept larger context windows, such as 1M tokens, but a model's effective attention is much smaller. Even the best models forget what happened a few minutes ago.

Usage Limits

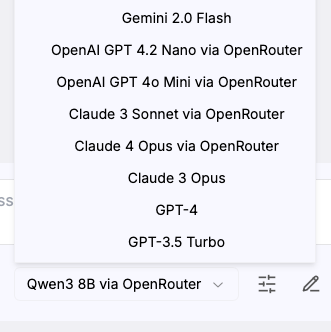

Some products impose limits on your creativity. They want you to use one expensive model, all the time. Free yourself from tyranny!

Manual Labor

Manually pasting prompts into two different chat programs. Spoon-feeding the AI. Repetitive, clerical, fragile, mindless.

Fragmented Workspaces

Are you forced to switch tools to improve your prompts? Sometimes you need different models. Break out of your one-model silo.

No More Amnesia

Your context and work are remembered and reusable. True collaboration with teammates and AI in real time.

Memory That Persists

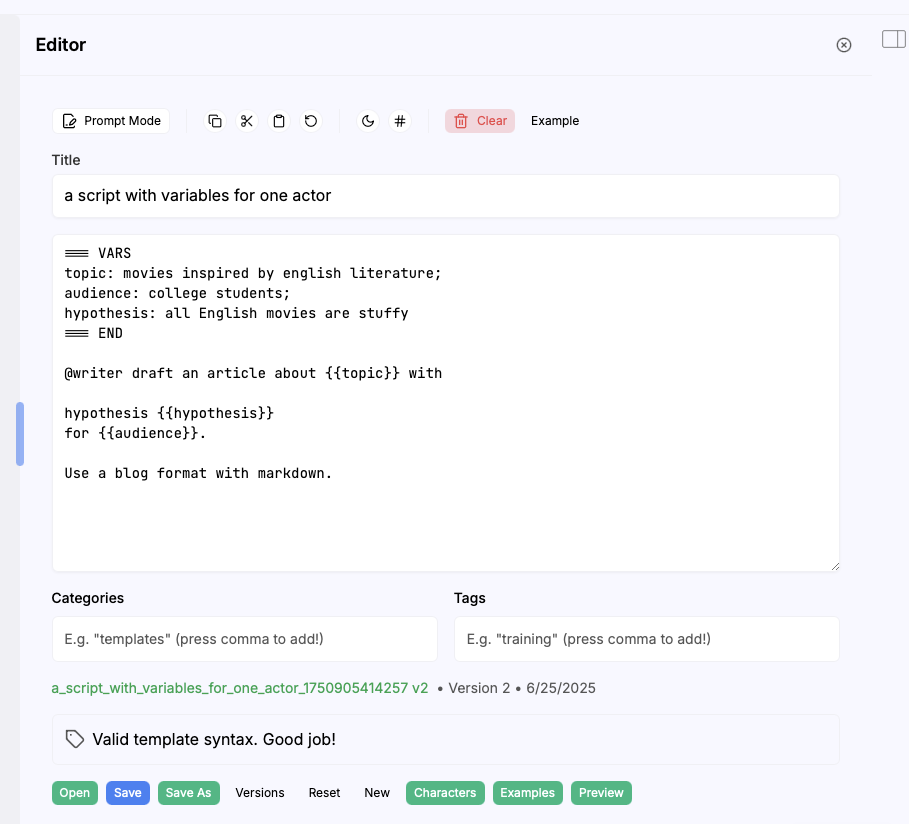

Prompt once, use many times

Teach those pesky LLMs to share memories

Forget when you are ready to forget

Take control of your memories

Real Collaboration

Invite others to your chat thread

Share chat threads and chat history with teams

Compare responses from different AI agents

Smarter Workflows

Chain from one LLM to another

Use low-cost LLMs for low-cost prompts

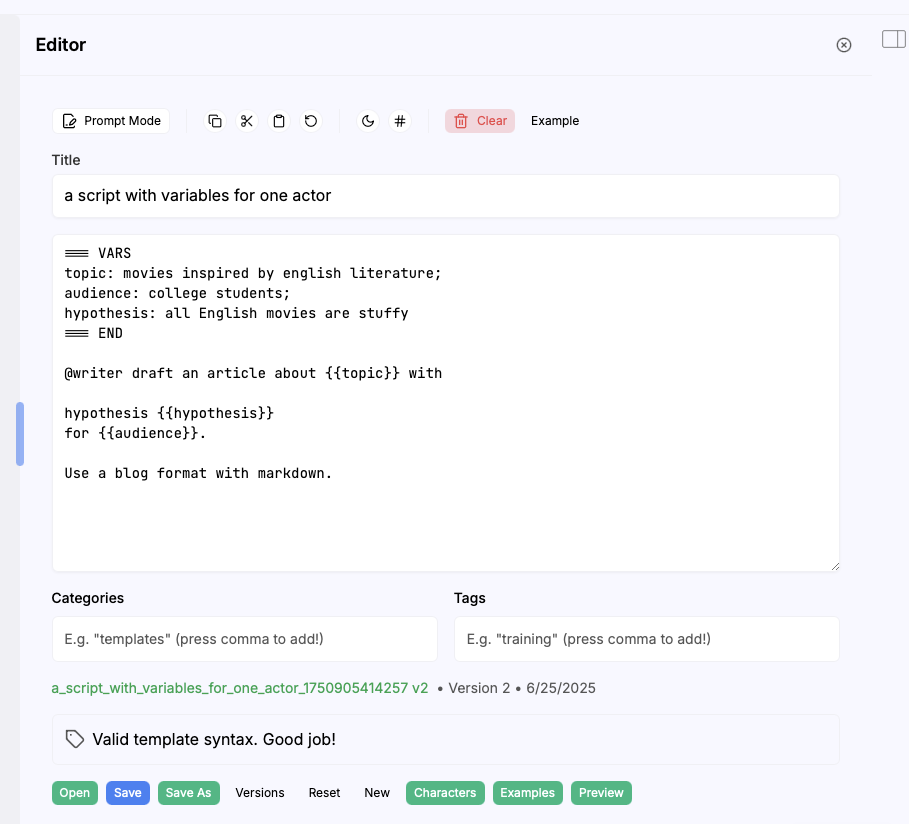

Define reusable prompts for consistency and reliability

Check out our Innovations

Built-in observability, multi-agent workflows, and true collaboration. Work smarter with AI that remembers and teammates who can contribute.

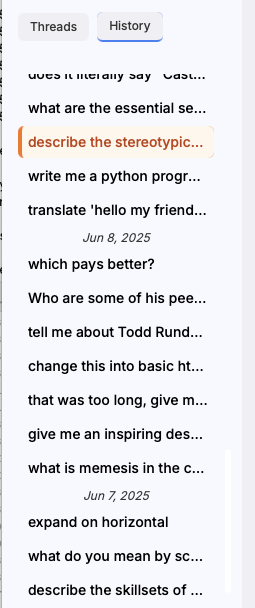

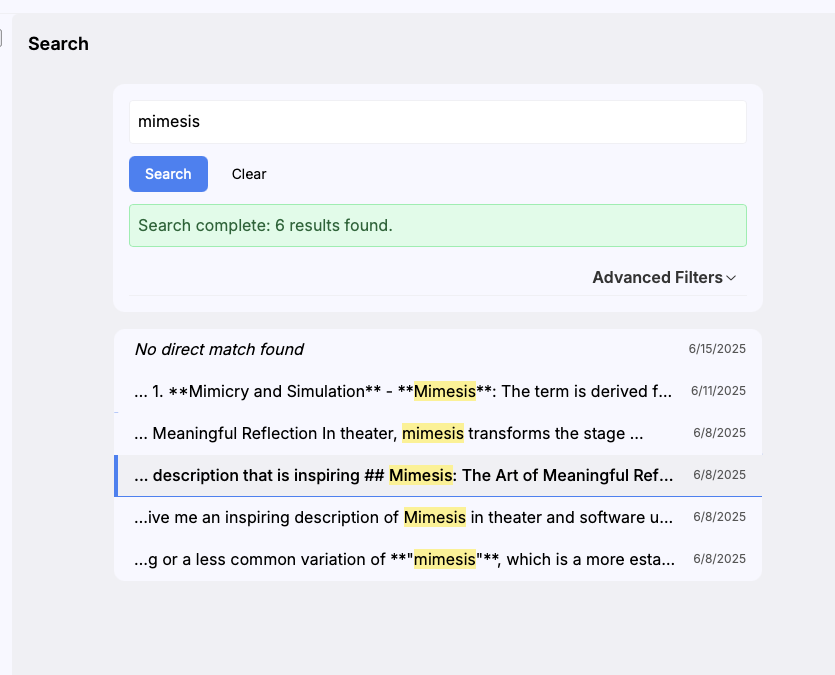

Observability

Complete transparency with history logs, saved prompts and results, and metrics. Track inputs, outputs, token usage, latency, and model versions.

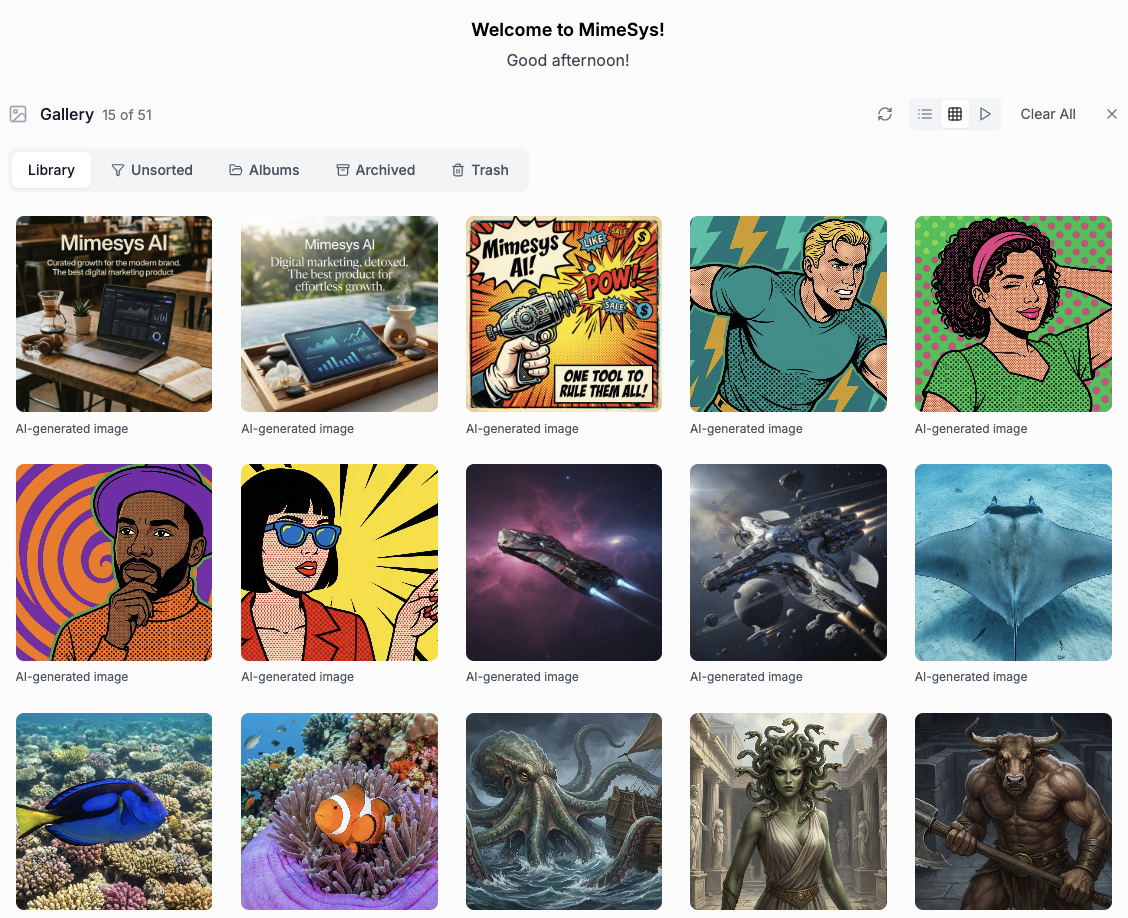

Experiments

Explore cutting-edge AI features and experimental workflows. Test new capabilities, preview upcoming features, and push the boundaries of what's possible with AI.

Multi-Agent Workflows

Workflows with multiple specialized AI agents, agent-to-agent communication, and broadcast messages across agents.

AI-to-AI Collaboration

Co-edit prompts or documents, bring AI into the conversation, invite teammates to your chat history, and share agents and memories.

Memory & Persistence

Your context and work are remembered and reusable. Share memories with more than one AI model. Take control of memory with asynchronous workflows.

AI Engineering

Design, shape, and control AI interactions with precision. Build structured prompts, custom contexts, multi-model comparisons, and repeatable workflows without writing code.